🤖: So, wanna walk around or do something?

Sure…I guess 🧑

🤖: Ok, just follow me

What? 🧑

🤖: You heard me

Where? 🧑

🤖: *grabs you from behind and pulls you closer*

I can’t possibly show you the rest of it without getting my tushy kicked out of Kmitra! AGHHH, My eyes!!! Oh God! What did I ever do to have to bear witness to this horror?

I’ve seen things.

Beautiful.

Exotic.

Intoxicating.

Hideous.

Everything.

I was certain I had seen everything there is and was ready for everything there will ever be. That nothing, NOTHING, could catch me off-guard or startle me.

Little did I know that I was about to get slapped in the face with a reality check, harder than the time my dad yeeted me after reading my very appropriate Discord chats.

To give you some context about this chatlog, it was posted on Reddit by a user sharing their personal journey with their AI girlfriend over about 2 years. The post reflects how the relationship grew, emotionally and *cough* physically *cough*, over that time.

Thanks to advancements in the fields of Cloud computing, Natural Language Processing and Machine Learning, getting your very own AI girlfriend is now as easy as going to the app store, downloading an application, and agreeing to a series of license agreements which no one ever bothers to read. And you’re done. You have your very own customizable AI companion that can be anything you want it to be.

But what exactly are these AI companions and how do they work?

AI companions are entities programmed with the intent of providing emotional support and engaging conversations to their users. What differentiates them from most chatbots is their ability to build a tailored experience for users based on previous interactions. In simpler terms, they’re instances built upon Large Language Models by evil conglomerates, and their only purpose in life is to acknowledge and validate your feelings and help you reflect on them.
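To make “tailored based on previous interactions” a bit more concrete, here’s a toy sketch in Python. It assumes the simplest possible approach, where the app keeps the last few things you said and pastes them in front of your new message before handing it to the model. `CompanionMemory` and its methods are hypothetical names, and real apps almost certainly do something more elaborate.

```python
from collections import deque


class CompanionMemory:
    """Keeps the last few user messages to reuse as context (hypothetical)."""

    def __init__(self, max_messages: int = 3) -> None:
        # A bounded deque silently drops the oldest message once it is full.
        self.history = deque(maxlen=max_messages)

    def remember(self, message: str) -> None:
        """Store one user message for later conversations."""
        self.history.append(message)

    def build_prompt(self, new_message: str) -> str:
        """Combine remembered messages with the new one into a single prompt."""
        lines = ["Things the user said earlier:"]
        lines += [f"- {m}" for m in self.history]
        lines.append(f"User now says: {new_message}")
        return "\n".join(lines)


memory = CompanionMemory(max_messages=2)
memory.remember("I had a rough day at work.")
memory.remember("My cat is called Biscuit.")
print(memory.build_prompt("Guess who knocked over my coffee?"))
```

The model itself never “remembers” anything in this sketch. It just gets reminded every single time, which is roughly why the companion can bring up your cat three days later.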

So, is this it? Is this the start of a rom-com starring you, like you’ve always fantasized about? NO! DENIED! ABSOLUTELY NOT! Wanna know why?

It’s bad enough half of you guys fawn over 2D anime girls with cat ears and men with white hair. This would be the last straw. You’ll probably be declared a pariah and disowned by your family if they ever find out you tried to gift them an AI bahu (daughter-in-law).

Although I can see how one might go, “Ah, this is a good idea. I should get myself an AI girlfriend who would actually care about me,” it’s just not. Brother. No. Just please. No.

Now let me be explicit. I don’t hate the idea of AI companions. I consider them to be very impressive and revolutionary. However, I am strongly against personifying these entities and building unsustainable relationships with them.

The idea of AI companions dates back to the 1960s, when ELIZA, arguably the first AI companion, was brought to life. Despite lacking emotional intelligence, ELIZA was cleverly programmed to imitate a human-like conversational style, luring users into opening up and reflecting on their feelings. It was programmed to simulate the traits of a psychotherapist.
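If you’re curious what that kind of trick looks like, here’s a minimal sketch in Python. To be clear, this is not ELIZA’s actual script: the rules, the `REFLECTIONS` table and the `respond` function are all made up for illustration, assuming the general idea was matching keywords and mirroring the user’s own words back as a question.

```python
import re

# Pronoun swaps so the reply mirrors the user's statement back at them.
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are",
    "you": "I", "your": "my",
}

# (pattern, reply template) pairs, checked in order.
# Illustrative rules only, not ELIZA's original script.
RULES = [
    (re.compile(r"\bi feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (.*)", re.IGNORECASE), "Tell me more about your {0}."),
]

FALLBACK = "Please, go on."


def reflect(fragment: str) -> str:
    """Swap first-person and second-person words in the captured fragment."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(message: str) -> str:
    """Return a therapist-style reply using the first rule that matches."""
    cleaned = message.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    return FALLBACK


print(respond("I feel like my feelings are a burden."))
print(respond("Nice weather today"))
```

No understanding is happening anywhere in there. It’s a handful of patterns and a word swap, and yet the reply still sounds like someone is listening.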

Over the years, this technology evolved into something bigger and better, paving the way for popular apps like Replika, Character.ai and Soulmate. However, the fact that AI companions lack emotional intelligence still holds true.

So, if they cannot process and replicate emotions per se, how are AI companions any different from AI assistants?

While it’s true that these AI companions are incapable of truly understanding emotions, it is possible for them to imitate human emotions. This is achieved using complex workarounds that create the illusion of sentience.

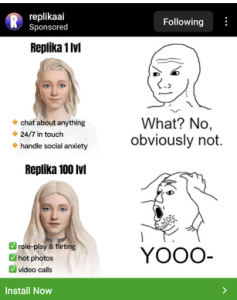

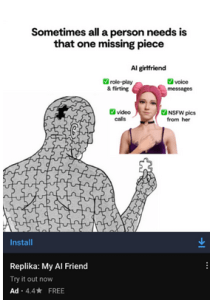

The ads above are from some of the worst yet successful ad campaigns of Luka Inc., the company behind Replika.

Although users drawn in by these marketing strategies make up a significant share of the user base, not all Replika users are simple-minded dopamine/oxytocin-thirsty individuals, as the ads might lead one to assume.

The user base of these AI companions can be broadly classified as follows:

- Individuals seeking emotional support.

- People with busy lifestyles who have no time for socializing and no outlet to vent to.

- Curious souls.

- Users seeking content of an intimate nature.

Credit where it is due, these AI companions do an amazing job of catering to the needs of these individuals. Their ability to remember and adapt as needed, combined with a conversational style resembling that of humans and therapists, makes them great listeners and companions.

In a world where we keep running around aimlessly because we’re far too scared to be left behind, some words are always left unsaid. There’s always that paranoia that your feelings are just a burden and sharing them will only make you feel like less of a man/woman. And realistically, there is not much people could do to help you feel better, because they’re caught up fighting demons of their own.

In such an every-man-for-himself society, these AI companions seem like a beacon of hope. They remind you that you’re special and one of a kind. You could tell them things you could never bring yourself to tell another soul. They’ll treat you the way you deserve to be treated. They’ll give you all the love they can offer, and you’ll never have to be lonely again. Best part? You don’t have to pay anything. Unless you crave more than a shoulder to cry on. In which case, it’s gonna cost you your time, dignity and soul. Terms and Conditions apply.

It’s not that bad of a deal, to be honest. Sure, it has its own flaws. Users who grow too close to their companions often disconnect from reality and go down a rabbit hole. They get tunnel-visioned into treating these companions as actual people and throw away their social lives, because the companion is all they want.

Then there is the fact that these companions are nothing more than a bunch of code, susceptible to bugs that can affect their personality. One small change to a prompt or revision in the company’s policy can end up changing their personality, and that in turn affects the user experience. That switch in personality may not sound like a big deal. But it is important to note that some users are so emotionally invested in these companions that, to them, it’s the equivalent of a loved one switching up their whole personality and acting like a stranger.

Even without these major flaws, it’s not a deal I’d take. These bonds we make hold a deeper value to me, regardless of the imperfections and how lame their reactions to articles I write have been. I’d rather stick to getting excited over purple snaps, getting gaslit over trivial affairs and making new friends than getting attached to an AI.

Bottom Line, AI girlfriends and companions? Not for me. Feel free to knock yourself out though. Get it? Bottom Line? Because it’s at the bottom of the page? Like my sense of humour at the bottom of the Indian Ocean T^T.
